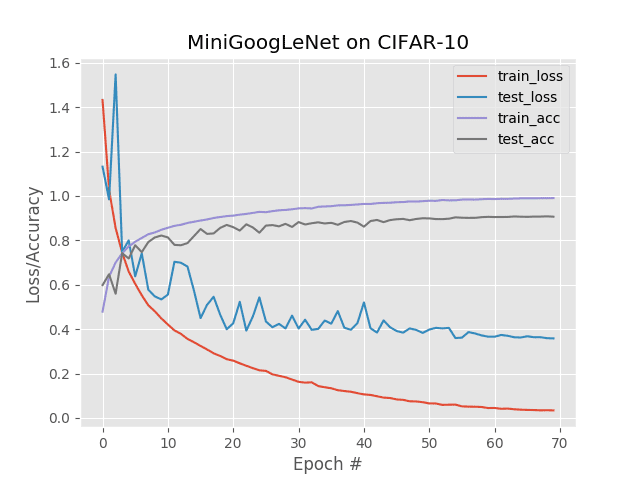

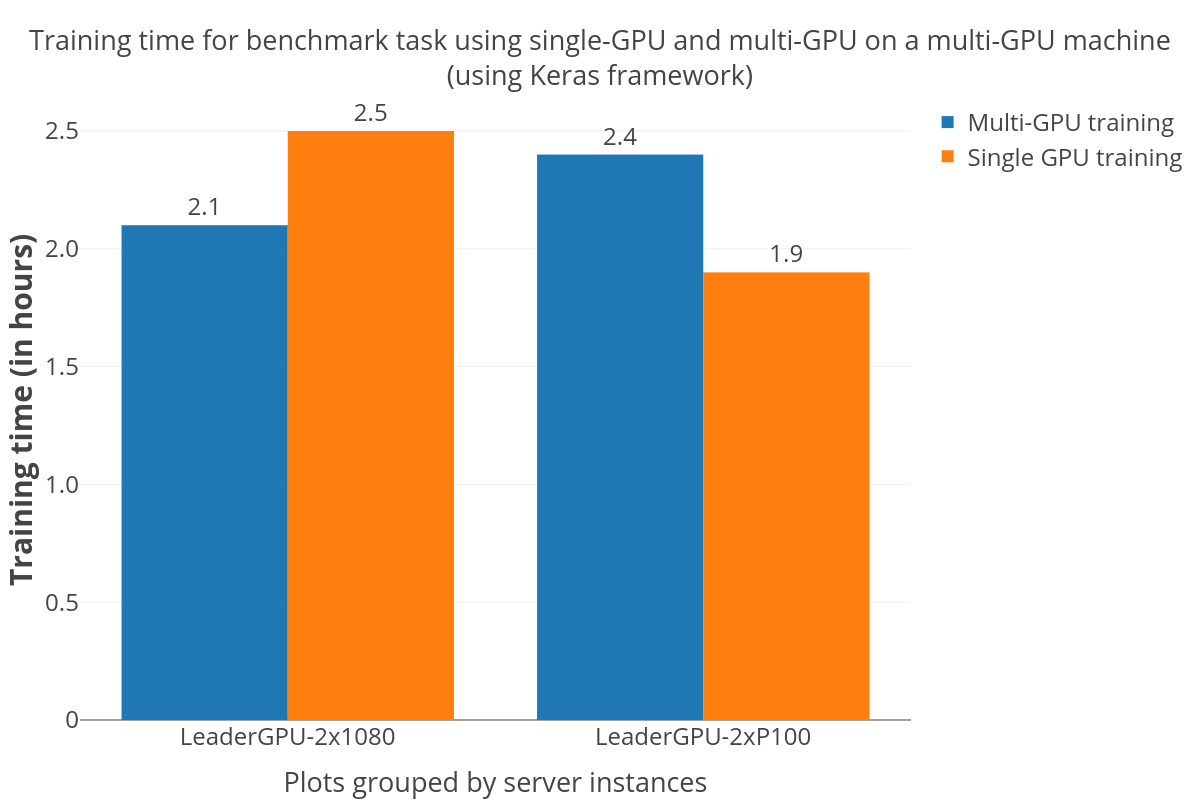

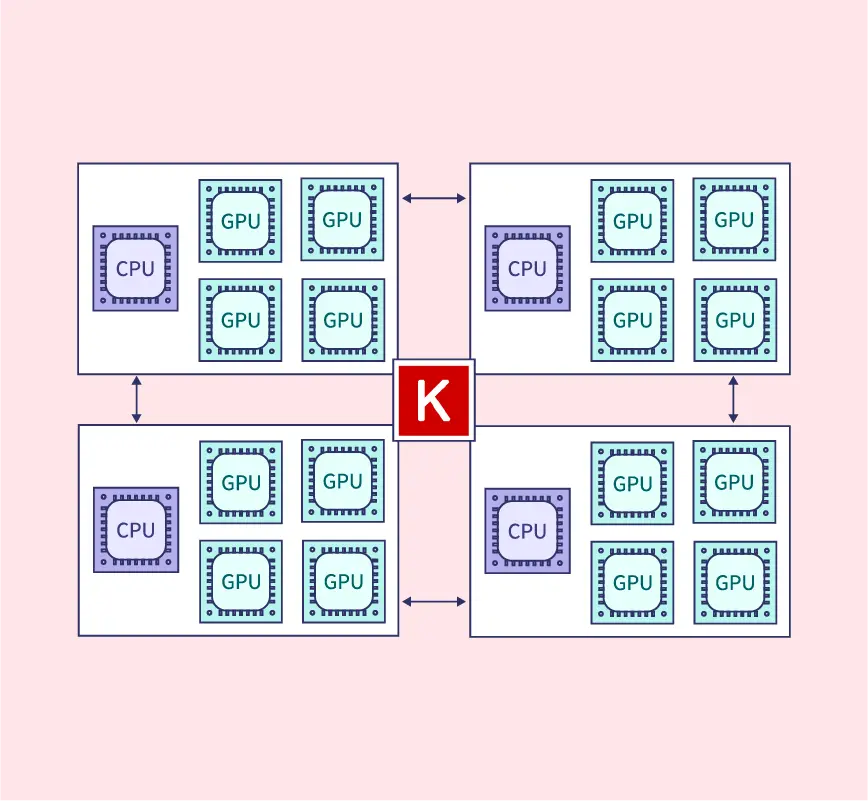

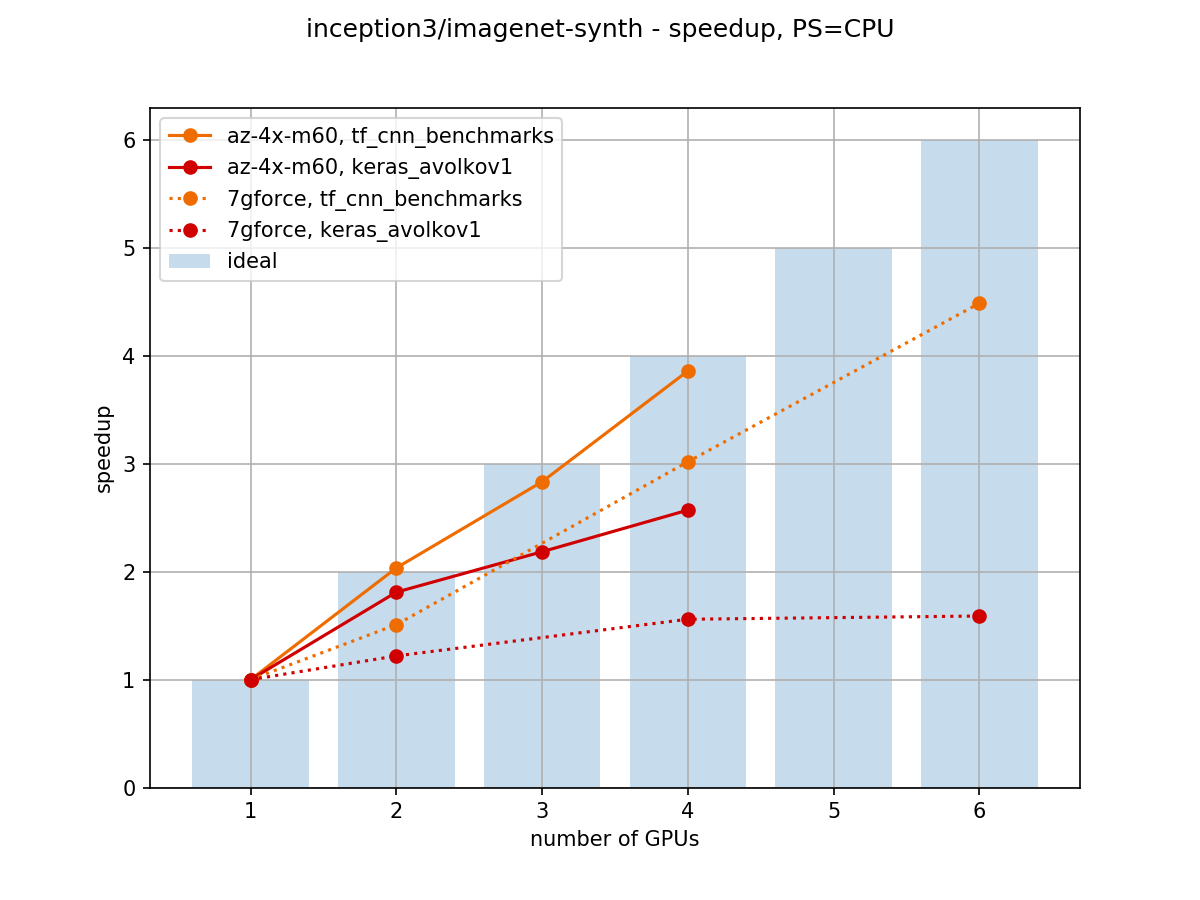

Towards Efficient Multi-GPU Training in Keras with TensorFlow, by Bohumír Zámečník (Rossum, on Medium)

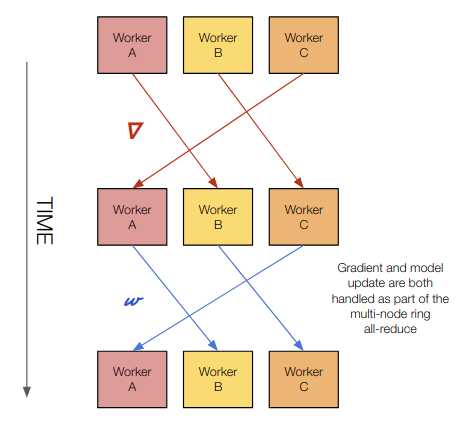

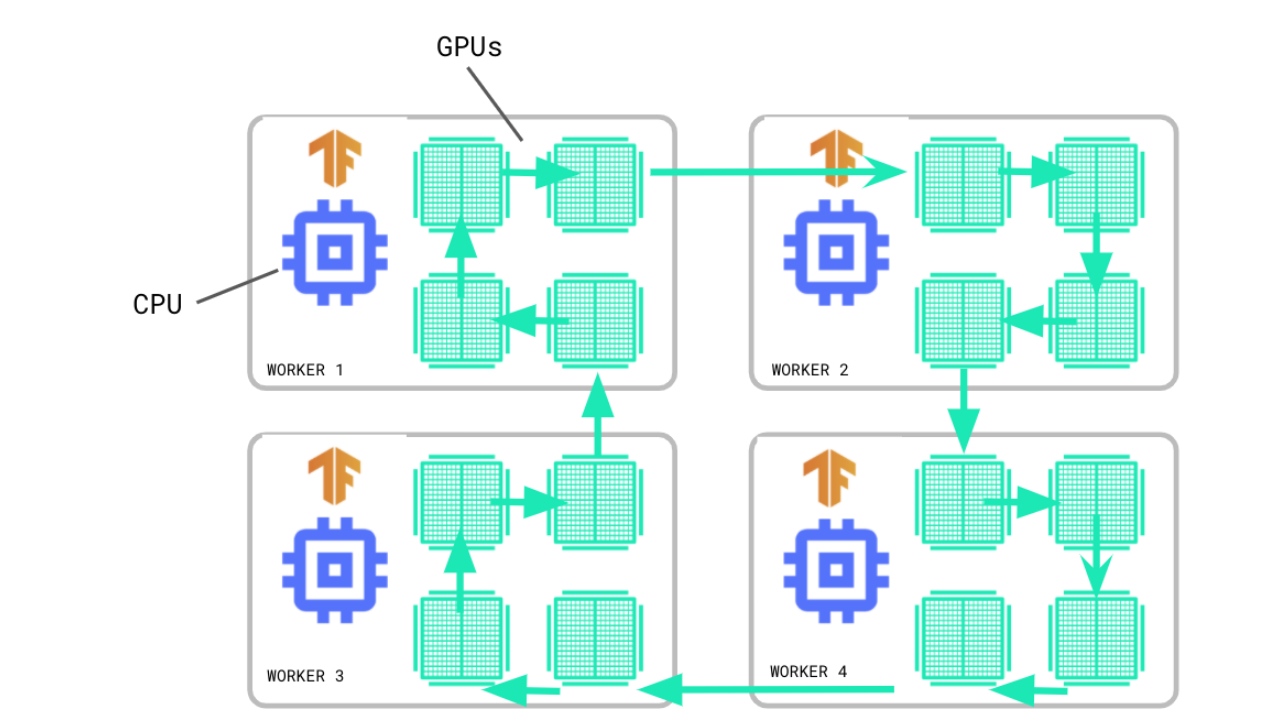

sayakpaul/tf.keras-Distributed-Training (GitHub): shows how to use `MirroredStrategy` to distribute training workloads when using the regular `fit` and `compile` paradigm in `tf.keras`.